Subsea telerobotics technologies have advanced significantly over the past few decades, driven by the need for remote inspection, maintenance, and research expeditions in underwater environments. Remotely operated vehicles (ROVs) and autonomous underwater vehicles (AUVs) are typically deployed to perform remote tasks in subsea environments that are beyond the reach of human scuba divers. The robots are generally teleoperated from a surface vessel or a base station for applications such as underwater infrastructure inspection, seabed mapping, remote surveillance, environmental monitoring, scientific expeditions, and more.

Existing subsea telerobotics technologies only offer human-to-machine (H2M) control interfaces for communicating mission instructions to the sub. The sole feedback in this process is a delayed sensory update on the teleop console, which is a noisy low-dimensional representation of the environment perceived by the sub (camera feed, sonar scan). Our research focuses on enabling effective and adaptive human-machine collaborative task execution in subsea telerobotics. Beyond direct teleoperation, our goal is to develop an interactive human-machine interface (HMI) for teleoperators to influence the mission planning and execution by subsea ROVs.

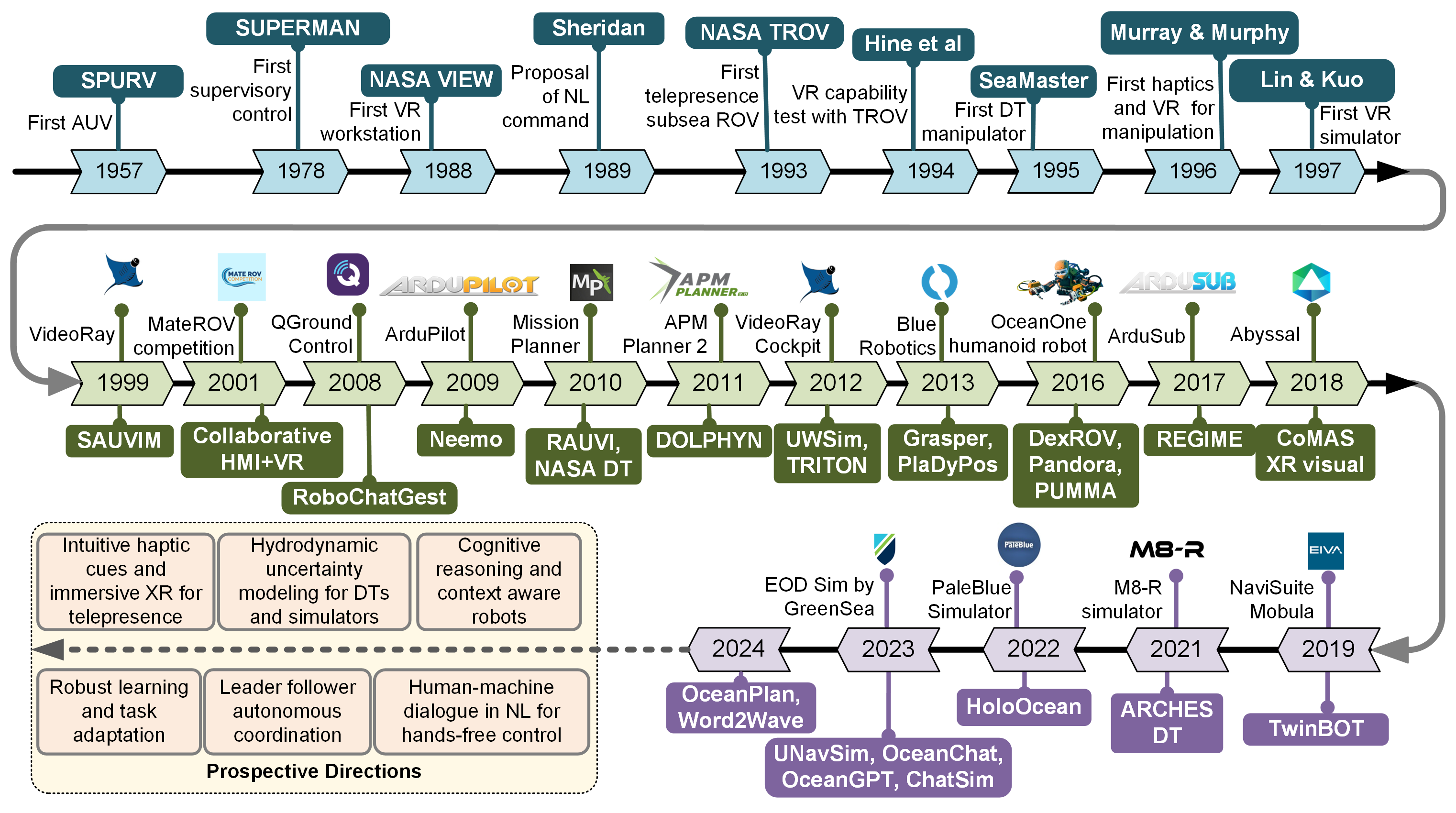

The figure shows the chronological evolution of subsea HMI technologies. The top row highlights early achievements and milestones. As the technology advanced, this century has seen industry solutions for interfaces and simulators that incorporate XR, haptic features, and natural language interactions driven by LLMs. Review Paper

EgoExo and EgoExo++

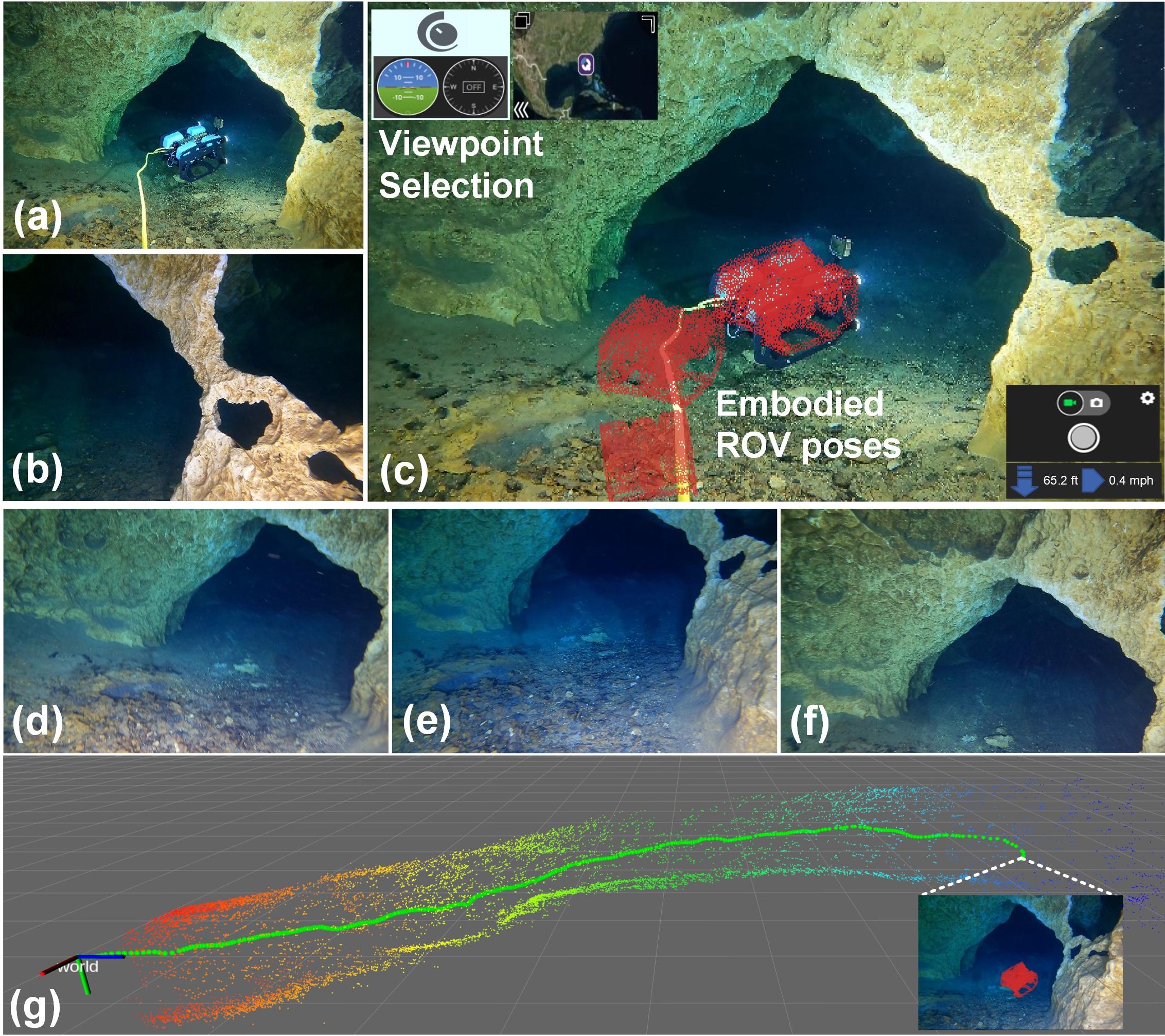

In this project, we introduce an active vision interface that offers three new technologies: (i) interactive view synthesis based on operators' active gaze; (ii) on-demand viewpoint selection; and (iii) SLAM pipeline integration paired with the cross-embodied mission simulator interface. Our proposed Ego-to-Exo (egocentric to exocentric) is an active view generation framework integrated into a visual SLAM system for improved subsea teleoperation. Some features of our proposed interface are shown in the figure on the right, where (a) an underwater ROV is teleoperated for mapping underwater caves; (b) the corresponding egocentric view; (c) the Ego-Exo console with embodied robot poses; (d-f) other viewpoints selected by the teleoperator; and (g) the SLAM pipeline in the mission simulator. Such on-demand exocentric views offer comprehensive peripheral and global semantics for improved teleoperation. Project page Paper

Moreover, EgoExo++ extends beyond the 2D exocentric view synthesis of EgoExo by adding dense 2.5D ground surface estimation on the fly.

It simultaneously renders the ROV model onto this reconstructed surface, enhancing semantic perception and depth comprehension.

The computations involved are closed-form and rely solely on egocentric views and monocular SLAM estimates, which makes it portable across existing teleoperation engines and robust to varying waterbody characteristics.

See more details on the project page!
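As a rough illustration of what a closed-form surface estimate from SLAM output can look like, the sketch below fits a single plane to sparse 3D landmarks by linear least squares. This is an assumption made for illustration only and is not the EgoExo++ estimator; the function names `fit_ground_plane` and `ground_height`, the z-up frame, and the sample points are all hypothetical.

```python
import numpy as np


def fit_ground_plane(points):
    """Fit z = a*x + b*y + c to an (N, 3) array of landmarks by least squares.

    Hypothetical helper: the landmarks stand in for sparse map points from a
    monocular SLAM system, so they are only defined up to an unknown scale.
    """
    pts = np.asarray(points, dtype=float)
    # Design matrix [x, y, 1]; the least-squares solution is closed-form.
    design = np.column_stack([pts[:, 0], pts[:, 1], np.ones(len(pts))])
    coeffs, *_ = np.linalg.lstsq(design, pts[:, 2], rcond=None)
    return coeffs  # (a, b, c)


def ground_height(coeffs, x, y):
    """Evaluate the fitted plane at (x, y), e.g. to place a rendered ROV model."""
    a, b, c = coeffs
    return a * x + b * y + c


if __name__ == "__main__":
    # Synthetic landmarks scattered around a gently sloping seabed.
    rng = np.random.default_rng(0)
    xy = rng.uniform(-5.0, 5.0, size=(200, 2))
    z = 0.1 * xy[:, 0] - 0.2 * xy[:, 1] - 5.0 + rng.normal(0.0, 0.01, 200)
    plane = fit_ground_plane(np.column_stack([xy, z]))
    print("plane coefficients:", plane)
    print("height under ROV at (1, 1):", ground_height(plane, 1.0, 1.0))
```

A single plane is the simplest possible surface model; a dense 2.5D estimate would need a richer representation, such as a height grid, which this sketch does not attempt.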

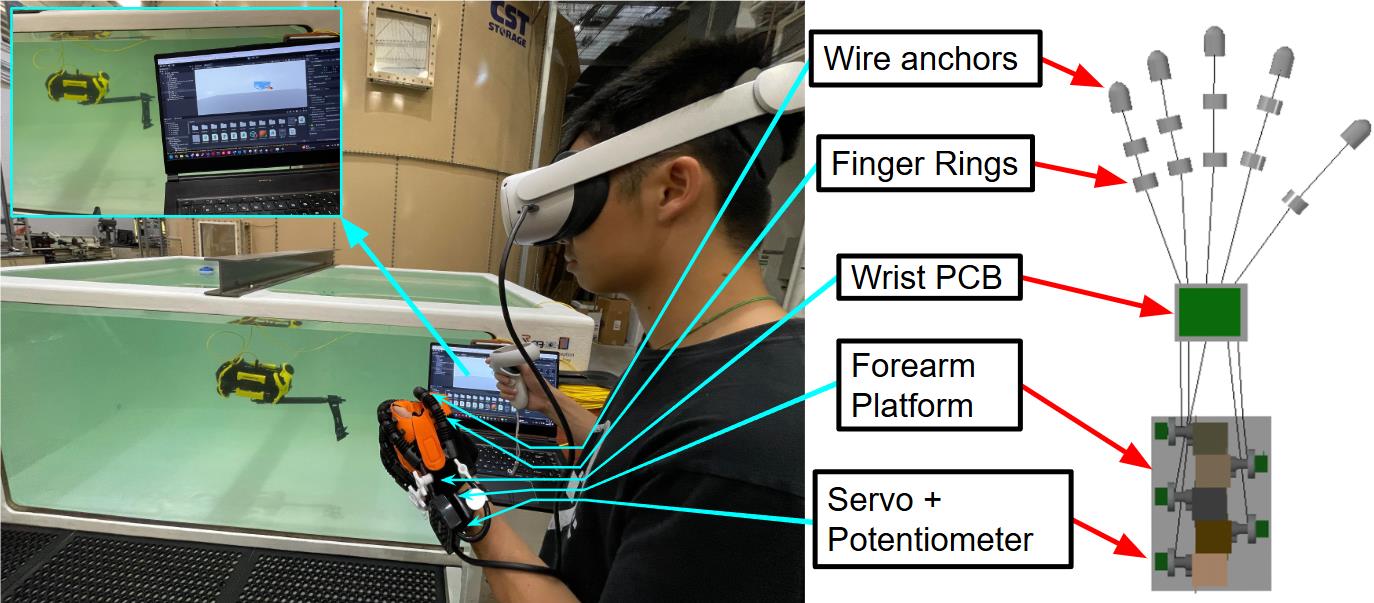

SubSense: A VR-Haptic Framework

This project investigates the integration of haptic feedback and virtual reality (VR) control interfaces to enhance teleoperation and telemanipulation of underwater ROVs. Traditional ROV teleoperation relies on low-resolution 2D camera feeds and lacks immersive sensory feedback, which diminishes situational awareness in complex subsea environments. SubSense is a novel VR-Haptic framework that adds a non-invasive feedback interface to an otherwise 1-DOF manipulator, which is paired with the teleoperator's glove to provide haptic feedback and grasp status. Additionally, our framework integrates end-to-end software for managing control inputs and displaying immersive camera views through a VR platform.

We validate the system through comprehensive experiments and user studies, demonstrating its effectiveness over conventional teleoperation interfaces, particularly for delicate manipulation tasks. Specifically, we use a 3-disk Tower of Hanoi (TOH) experimental setup, as shown on the right. Participants operate the ROV in two modes: (1) with a traditional first-person view (FPV); and (2) with VR-based control and haptic feedback through our SubSense framework. Performance is quantified by task completion time and by the number and severity of incidents of object damage. Additionally, participants complete a post-trial survey to subjectively evaluate satisfaction and ease of use for each mode of operation. Our findings indicate that immersive interfaces enhance remote situational awareness and operator embodiment in underwater ROV teleoperation. While preliminary, these results provide a strong foundation for further development and larger-scale evaluations in more complex environments.

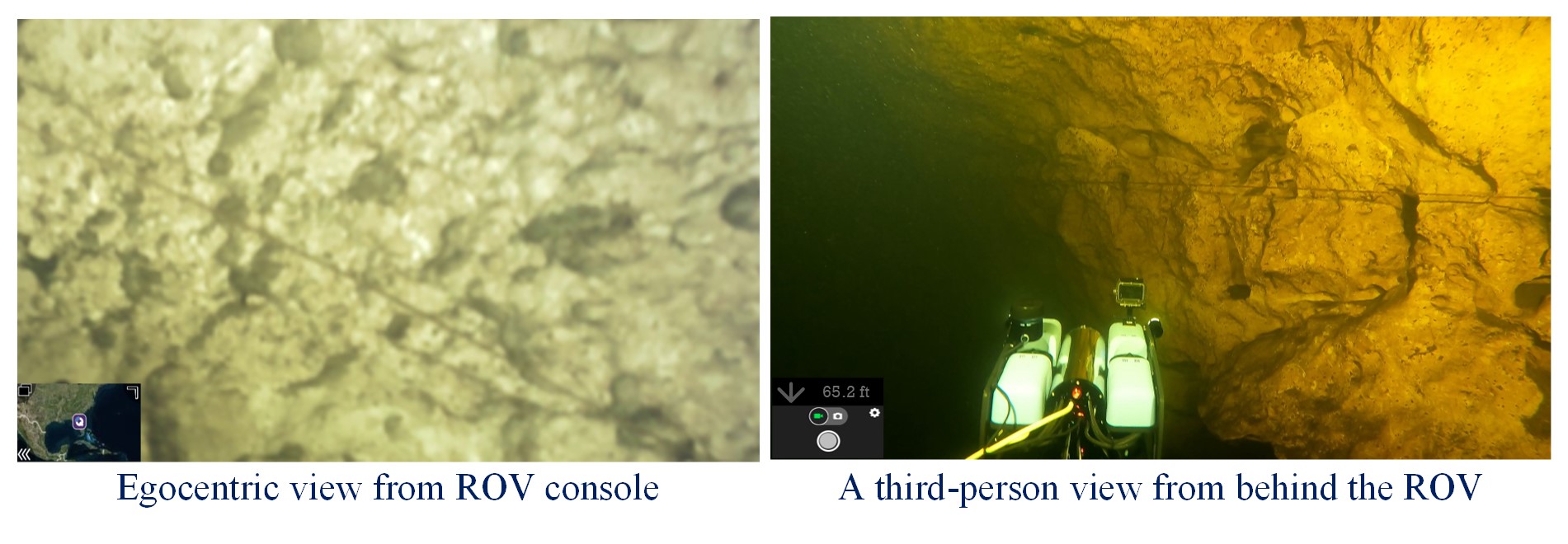

EOB (Eye On the Back)

Although subsea industries and naval defense teams deploy underwater ROVs with high-end cameras and sonars, remote teleoperation remains a challenge in adverse visibility conditions and around complex structures. This is because the typical first-person feed from the camera is not the best view for ROV teleoperation, especially in deep water missions in low-visibility conditions. As illustrated in the figure on the right, a third-person view from behind the ROV provides more informative visuals with peripheral semantics. We address these issues by introducing a novel EOB (Eye On the Back) concept to provide a third-person perspective for remote ROV teleoperation in subsea missions. We demonstrate that third-person camera views from immediately behind the ROV offer significantly more peripheral information than typical first-person feeds alone. We experiment with multiple configurations for an exocentric camera setup (see the figure on the right) in tasks such as subsea structure inspection and underwater cave exploration by a surface operator. We find that the EOB views allow us to design more interactive consoles, which teleoperators can use to find global (exocentric) views, plan complex maneuvers, and conduct efficient missions with higher safety margins. Teleoperation consoles designed with these capabilities will offer flexibility and more interactive features for human-machine collaborative task execution in subsea missions.

As shown in the figure below, we consider an EOB Arc behind the ROV from where third-person perspectives are likely to provide useful peripheral visuals for safe robot maneuvering. For proof of concept, three EOB configs are considered: two physical (Config-1, Config-2) and one virtual (Config-n). The first two configs attach dedicated cameras, either on a rigid rail or wrapped around the tether, and thus require physical attachments. On the other hand, the virtual config is envisioned to provide on-demand views along the arc without any structural modifications.
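To make the geometry of an arc behind the ROV concrete, the sketch below computes a viewpoint position and viewing direction from the ROV pose. It is a minimal illustration under stated assumptions rather than the configuration used in the paper: the function `eob_arc_pose`, its parameters, and the z-up world frame with yaw measured about the vertical axis are all hypothetical.

```python
import numpy as np


def eob_arc_pose(rov_position, rov_yaw, radius, elevation, azimuth_offset=0.0):
    """Return (camera_position, look_direction) for a viewpoint behind the ROV.

    Assumed conventions (not taken from the paper): z-up world frame, yaw in
    radians about the vertical axis with yaw 0 facing +x, elevation in radians
    above the horizontal plane through the ROV, and azimuth_offset rotating the
    viewpoint sideways around the ROV.
    """
    rov_position = np.asarray(rov_position, dtype=float)
    # Heading that points directly away from the ROV's forward direction.
    back_angle = rov_yaw + np.pi + azimuth_offset
    horizontal = radius * np.cos(elevation)
    offset = np.array([
        horizontal * np.cos(back_angle),
        horizontal * np.sin(back_angle),
        radius * np.sin(elevation),
    ])
    camera_position = rov_position + offset
    # The camera looks back at the ROV so that the vehicle stays in frame.
    look_direction = rov_position - camera_position
    look_direction /= np.linalg.norm(look_direction)
    return camera_position, look_direction


if __name__ == "__main__":
    # Sample a few viewpoints along the arc, from level to 60 degrees above.
    for elevation_deg in (0, 30, 60):
        cam, look = eob_arc_pose([0.0, 0.0, -10.0], 0.0, 2.0,
                                 np.radians(elevation_deg))
        print(elevation_deg, cam, look)
```

In this parameterization, a physical config would correspond to one fixed choice of `radius` and `elevation`, while a virtual config would vary them on demand.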

Paper

Publications

- A. Abdullah, R. Chen, D. Blow, and M. J. Islam. Human-Machine Interfaces for Subsea Telerobotics: from Soda-straw to Natural Language Interactions. In Review, 2024.

- R. Chen, D. Blow, A. Abdullah, and M. J. Islam. SubSense: VR-Haptic and Motor Feedback for Immersive Control in Subsea Telerobotics. The OCEANS 2025 Great Lakes conference 250515 (113), US, 2025.

- A. Abdullah, R. Chen, I. Rekleitis, and M. J. Islam. Ego-to-Exo: Interfacing Third Person Visuals from Egocentric Views in Real-time for Improved ROV Teleoperation. International Symposium on Robotics Research (ISRR), CA, US 2024.

- M. J. Islam. Eye On the Back: Augmented Visuals for Improved ROV Teleoperation in Deep Water Surveillance and Inspection. SPIE Defense and Commercial Sensing, 2024, National Harbor, Maryland, US.