Low-light perception is crucial for visually guided underwater robots because the marine environment often presents challenging lighting conditions that can severely impact visual data quality. Sunlight rapidly diminishes with depth, and even in shallow waters, visibility can be compromised due to murky or turbid water. At greater depths, natural light is virtually non-existent, making it essential for robots to be equipped with low-light perception systems. These systems, including sensitive cameras and specialized sensors, allow robots to capture clear images and perform tasks like navigation, object detection, and environmental monitoring, even in dimly lit conditions. Without such capabilities, robots would struggle to navigate, avoid obstacles, or complete missions that require high-quality visual feedback.

Additionally, low-light perception is vital for specific applications like deep-sea exploration, nighttime operations, and inspection tasks such as the undersides of ships or offshore platforms. For instance, in search-and-rescue missions, robots need to operate in low-visibility environments to locate objects or individuals quickly. Similarly, in marine biology, low-light sensors allow robots to observe species that are active in deep waters or at night. Overall, low-light perception ensures that visually guided underwater robots can function effectively in a wide range of conditions, enhancing mission success and expanding their operational capabilities. We have several active projects looking into finding novel and improved solutions to ensure robust underwater perception by underwater robots.

UDepth Project

Monocular depth estimation is challenging in low-light underwater scenes, particularly on computationally constrained devices.

In the UDepth project, we demonstrate that it is possible to achieve state-of-the-art depth estimation performance while

ensuring a small computational footprint. While the full model offers over 66 FPS inference rates on a single GPU , our domain projection for coarse depth prediction runs at

51.5 FPS rates on single-board Jetson TX2s.

Monocular depth estimation is challenging in low-light underwater scenes, particularly on computationally constrained devices.

In the UDepth project, we demonstrate that it is possible to achieve state-of-the-art depth estimation performance while

ensuring a small computational footprint. While the full model offers over 66 FPS inference rates on a single GPU , our domain projection for coarse depth prediction runs at

51.5 FPS rates on single-board Jetson TX2s.

We also introduce a RMI Input Space by exploiting the fact that red wavelength suffers more aggressive attenuation underwater,

so the relative differences between {R} channel and {G, B} channel values can provide useful depth information for a given pixel.

As illustrated in this figure, differences in R and M values vary proportionately with pixel distances, as demonstrated by six 20 × 20 RoIs selected on the left image.

We exploit these inherent relationships and demonstrate that RMI≡{R, M=max{G,B}, I (intensity)} is a significantly better input space for visual learning pipelines of underwater monocular depth estimation models.

We also introduce a RMI Input Space by exploiting the fact that red wavelength suffers more aggressive attenuation underwater,

so the relative differences between {R} channel and {G, B} channel values can provide useful depth information for a given pixel.

As illustrated in this figure, differences in R and M values vary proportionately with pixel distances, as demonstrated by six 20 × 20 RoIs selected on the left image.

We exploit these inherent relationships and demonstrate that RMI≡{R, M=max{G,B}, I (intensity)} is a significantly better input space for visual learning pipelines of underwater monocular depth estimation models.

FUnieGAN Project

FUnIE-GAN is a GAN-based model for fast underwater image enhancement. It can be used as a visual filter in

the robot autonomy pipeline for improved perception in noisy low-light conditions underwater. In addition to SOTA performance, it

offers over 48+ FPS inference on Jetson Xavier and 25+ FPS on TX2 devices.

FUnIE-GAN is a GAN-based model for fast underwater image enhancement. It can be used as a visual filter in

the robot autonomy pipeline for improved perception in noisy low-light conditions underwater. In addition to SOTA performance, it

offers over 48+ FPS inference on Jetson Xavier and 25+ FPS on TX2 devices.

Despite recent advancements of interactive vision APIs and AutoML technologies, there are no universal platforms

or criteria to measure the goodness of visual sensing conditions underwater to extrapolate the performance bounds

of visual perception algorithms. Our current work attempts to address these issues for real-time underwater robot vision.

More details: coming soon...

Despite recent advancements of interactive vision APIs and AutoML technologies, there are no universal platforms

or criteria to measure the goodness of visual sensing conditions underwater to extrapolate the performance bounds

of visual perception algorithms. Our current work attempts to address these issues for real-time underwater robot vision.

More details: coming soon...

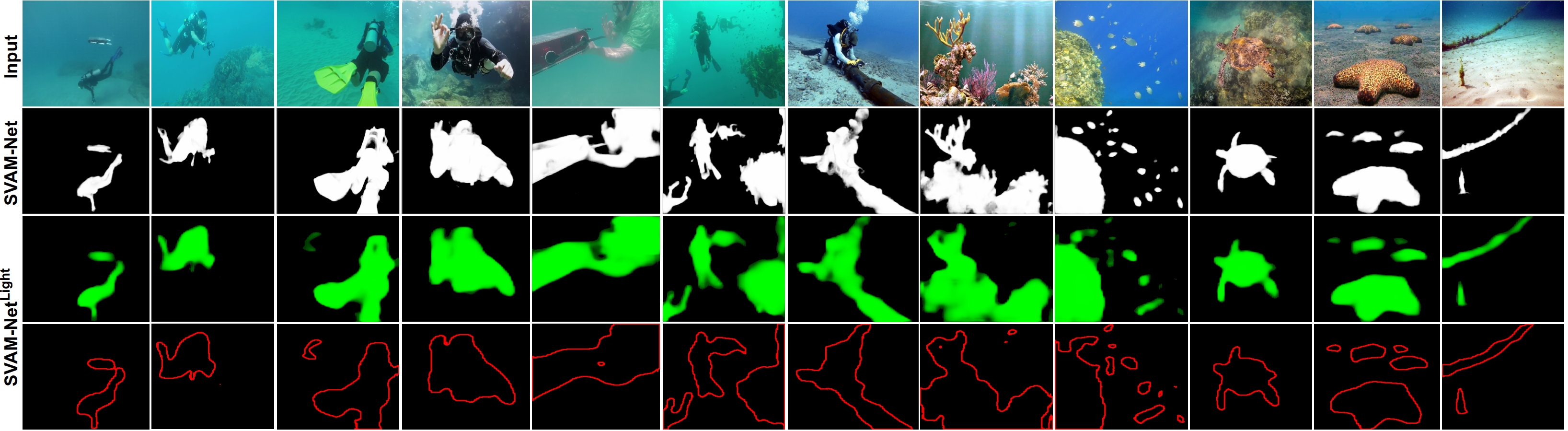

SVAM Project

An essential capability of visually-guided robots is to identify interesting and salient objects in images for

accurate scene parsing and to make important operational decisions. Our work on saliency-guided visual attention modeling (SVAM)

develops robust and efficient solutions for saliency estimation by combining the power of bottom-up and top-down deep visual learning.

Our proposed model, named SVAM-Net, integrates deep visual features at various scales and semantics for effective SOD in natural underwater images.

SVAM-Net incorporates two spatial attention modules to jointly learn coarse-level and fine-level semantic features for accurate salient object detection in underwater

imagery. It provides SOTA scores on benchmark datasets, exhibits better generalization performance on challenging test cases than existing approaches, and achieves fast

end-to-end run-time on single-board machines.

While SVAM is a class-agnostic approach, we are also working on the problem of class-aware visual attention modeling by semantic scene parsing.

We emperically demonstrated that a general-purpose solution of spatial attention modeling can facilitate over 45% faster

processing in robot perception tasks such as salient ROI enhancement, image super-resolution, and uninformed visual search.

We wre currently developing efficient deep visual models to achieve the desired performance bounds.

An essential capability of visually-guided robots is to identify interesting and salient objects in images for

accurate scene parsing and to make important operational decisions. Our work on saliency-guided visual attention modeling (SVAM)

develops robust and efficient solutions for saliency estimation by combining the power of bottom-up and top-down deep visual learning.

Our proposed model, named SVAM-Net, integrates deep visual features at various scales and semantics for effective SOD in natural underwater images.

SVAM-Net incorporates two spatial attention modules to jointly learn coarse-level and fine-level semantic features for accurate salient object detection in underwater

imagery. It provides SOTA scores on benchmark datasets, exhibits better generalization performance on challenging test cases than existing approaches, and achieves fast

end-to-end run-time on single-board machines.

While SVAM is a class-agnostic approach, we are also working on the problem of class-aware visual attention modeling by semantic scene parsing.

We emperically demonstrated that a general-purpose solution of spatial attention modeling can facilitate over 45% faster

processing in robot perception tasks such as salient ROI enhancement, image super-resolution, and uninformed visual search.

We wre currently developing efficient deep visual models to achieve the desired performance bounds.

Publications

- J. Wu, T. Wang, M.A.B. Siddique, M. J. Islam, C. Fermuller, Y. Aloimonos, C. A. Metzler. Single-Step Latent Diffusion for Underwater Image Restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 2025. Presented at ICCP 2025. [pre-print code ] *Impact Factor: 20.8

- B. Yu, J. Wu, and M. J. Islam. UDepth: Fast Monocular Depth Estimation for Visually-guided Underwater Robots. IEEE International Conference on Robotics and Automation (ICRA), 2023, London, UK. [project page pre-print code]

- M. J. Islam, R. Wang, and J. Sattar. SVAM: Saliency-guided Visual Attention Modeling by Autonomous Underwater Robots. Robotics: Science and Systems (RSS), P048, 2022, NYC, NY, US. [pre-print code data bib] (Acceptance Rate: 32%)

- M. J. Islam, Y. Xia, and J. Sattar. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robotics and Automation Letters (RA-L*), vol. 5, no. 2, pp. 3227-3234, 2020, doi: 10.1109/LRA.2020.2974710. [code data bib] (Impact Factor: 3.61)