The ability to operate for long periods and then return safely is a critical feature for Unmanned Underwater Vehicles (UUVs) in many important applications such as subsea inspection, remote surveillance, and seasonal monitoring. A major challenge for a robot on such long-term missions is estimating its location accurately, since GPS signals cannot penetrate the ocean's surface and Wi-Fi or radio communication infrastructure is not available underwater. Using a dedicated surface vessel for acoustic referencing, or surfacing to obtain GPS fixes, is power hungry, computationally expensive, and often impossible (e.g., in stealth applications). This project makes scientific and engineering advances toward solving this problem by using a novel optics-based framework and on-board AI technologies. The proposed algorithms and systems allow underwater robots to estimate their location with GPS-quality accuracy without ever resurfacing. More importantly, these capabilities enable long-term autonomous navigation and safe recovery of underwater robots without the need for dedicated surface vessels for acoustic guidance. Overall, the outcomes of this project contribute to long-term marine ecosystem monitoring and ocean climate observatory research, as well as to remote stealth mission execution for defense applications. This project is supported by the NSF Foundational Research in Robotics (FRR) program. We are working on this project in collaboration with the FOCUS lab (Dr. Koppal) and the APRIL lab (Dr. Shin) at UF.

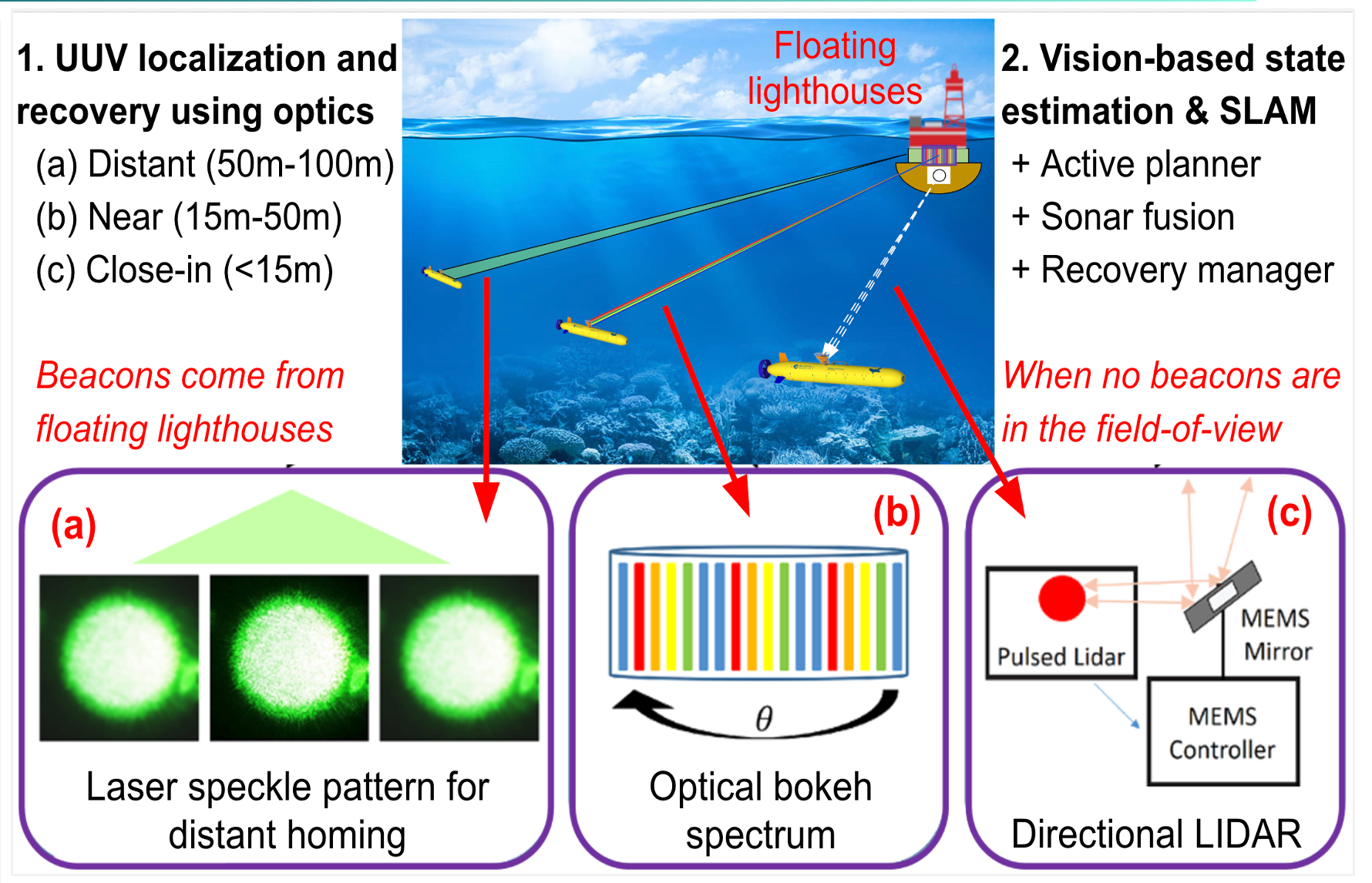

This project advocates a novel solution to the foundational problem of underwater robot localization and navigation by introducing the notion of "optical homing and penning". This new optics-based framework incorporates three sets of novel technologies for (a) distant UUV positioning with blue-green laser speckles, (b) accurate 3D orientation measurements from coded bokeh spectrums, and (c) GPS-quality pose estimates by a directionally controlled adaptive LIDAR. The combined optical sensory system will be deployable from specialized buoys acting as floating lighthouses. An intelligent visual SLAM system will also be developed for robust state estimation in deep waters when no lighthouse beacons are visible. For feasibility analysis and assessment, this project will formalize real-world deployment strategies on two UUV platforms through comprehensive ocean trials in the northern Gulf of Mexico and the Atlantic Ocean.
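
The basic geometry behind lighthouse-style positioning can be sketched in a few lines. This is a minimal illustration under our own assumptions, not the project's method: it abstracts away the laser-speckle and bokeh sensing entirely and simply assumes the vehicle has already measured the beacon's range, azimuth, and elevation in its own body frame, plus its 3D orientation (yaw, pitch, roll), and that the buoy's position is known in a local world frame (e.g., from the buoy's own GPS). The function names and frame conventions (right-handed, z up, Z-Y-X Euler angles) are hypothetical.

```python
import math

# Hypothetical sketch: absolute UUV position from a single "lighthouse" buoy.
# Assumed frames: right-handed, z up. Body frame: x forward, y left, z up.
# World frame: local east (x), north (y), up (z); buoy position is known.


def rotation_zyx(yaw, pitch, roll):
    """Body-to-world rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def beacon_in_body(rng, azimuth, elevation):
    """Convert a range/bearing measurement of the beacon into a body-frame vector."""
    horizontal = rng * math.cos(elevation)
    return (
        horizontal * math.cos(azimuth),
        horizontal * math.sin(azimuth),
        rng * math.sin(elevation),
    )


def locate_vehicle(beacon_world, rel_body, yaw, pitch, roll):
    """Vehicle position = beacon position - R @ (beacon offset in body frame)."""
    R = rotation_zyx(yaw, pitch, roll)
    rel_world = [sum(R[i][k] * rel_body[k] for k in range(3)) for i in range(3)]
    return tuple(b - r for b, r in zip(beacon_world, rel_world))


if __name__ == "__main__":
    # Illustrative numbers only: beacon 50 m away, 30 degrees above the horizon,
    # dead ahead of a vehicle heading due north (yaw = 90 degrees from east).
    rel = beacon_in_body(50.0, 0.0, math.radians(30.0))
    print(locate_vehicle((0.0, 0.0, 0.0), rel, math.radians(90.0), 0.0, 0.0))
```

With these made-up inputs the vehicle comes out at approximately (0, -43.3, -25): about 43.3 m south of and 25 m below the buoy. The sketch also makes the dependency explicit: any error in the orientation estimate rotates the beacon offset and shifts the position fix, which is why accurate 3D orientation is listed alongside positioning.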

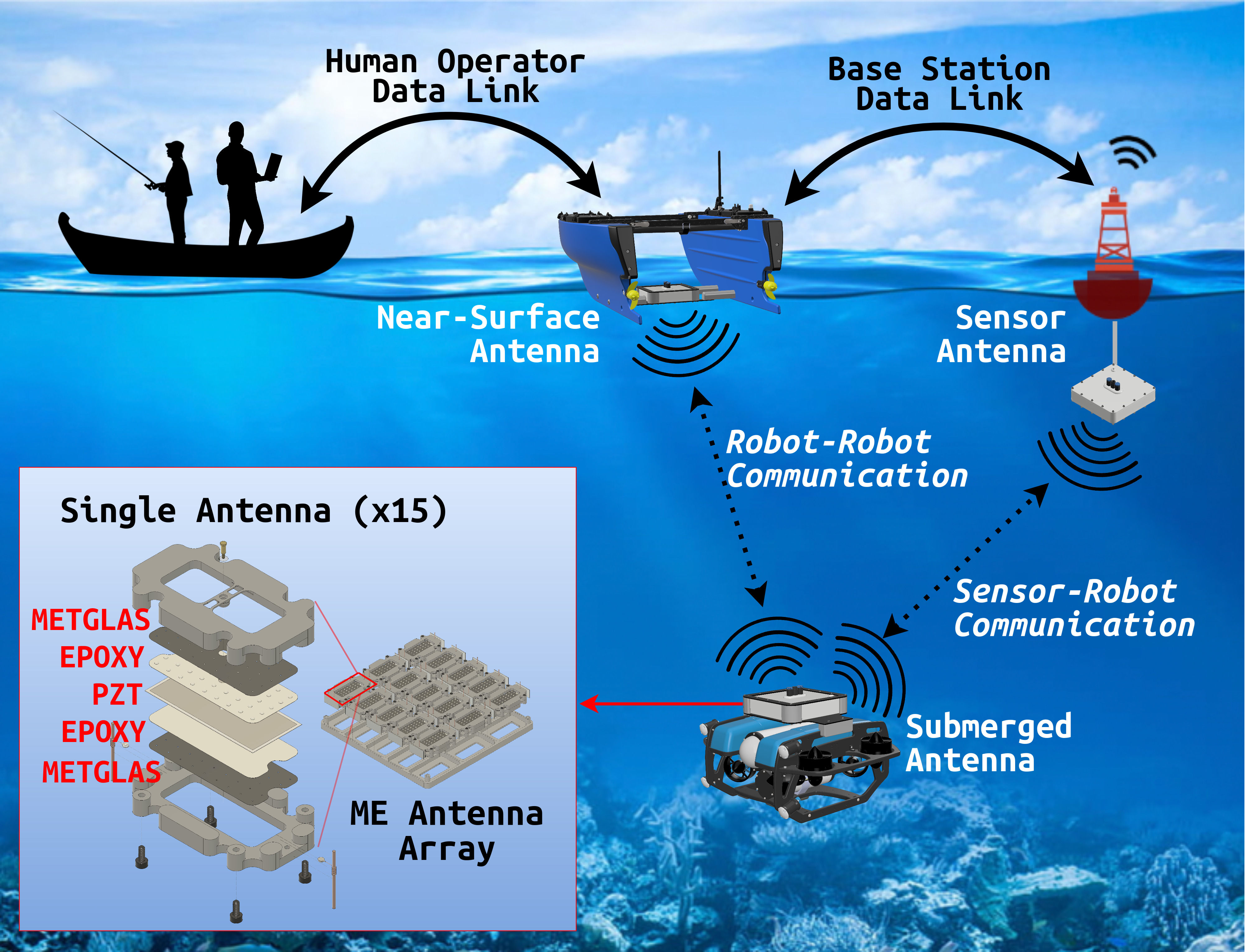

BlueME Project

We present the design, development, and experimental validation of BlueME, a compact magnetoelectric (ME) antenna array system for underwater robot-to-robot communication. BlueME employs ME antennas operating at their natural mechanical resonance frequency to efficiently transmit and receive very-low-frequency (VLF) electromagnetic signals underwater. To evaluate its performance, we deployed BlueME on an autonomous surface vehicle (ASV) and a remotely operated vehicle (ROV) in open-water field trials. Our tests demonstrate that BlueME maintains reliable signal transmission at distances beyond 200 meters while consuming only 1 watt of power. Field trials show that the system operates effectively in challenging underwater conditions such as turbidity, obstacles, and multipath interference, which generally degrade acoustic and optical links. This work represents the first practical underwater deployment of ME antennas outside the laboratory and implements the largest VLF ME array system to date. Project page

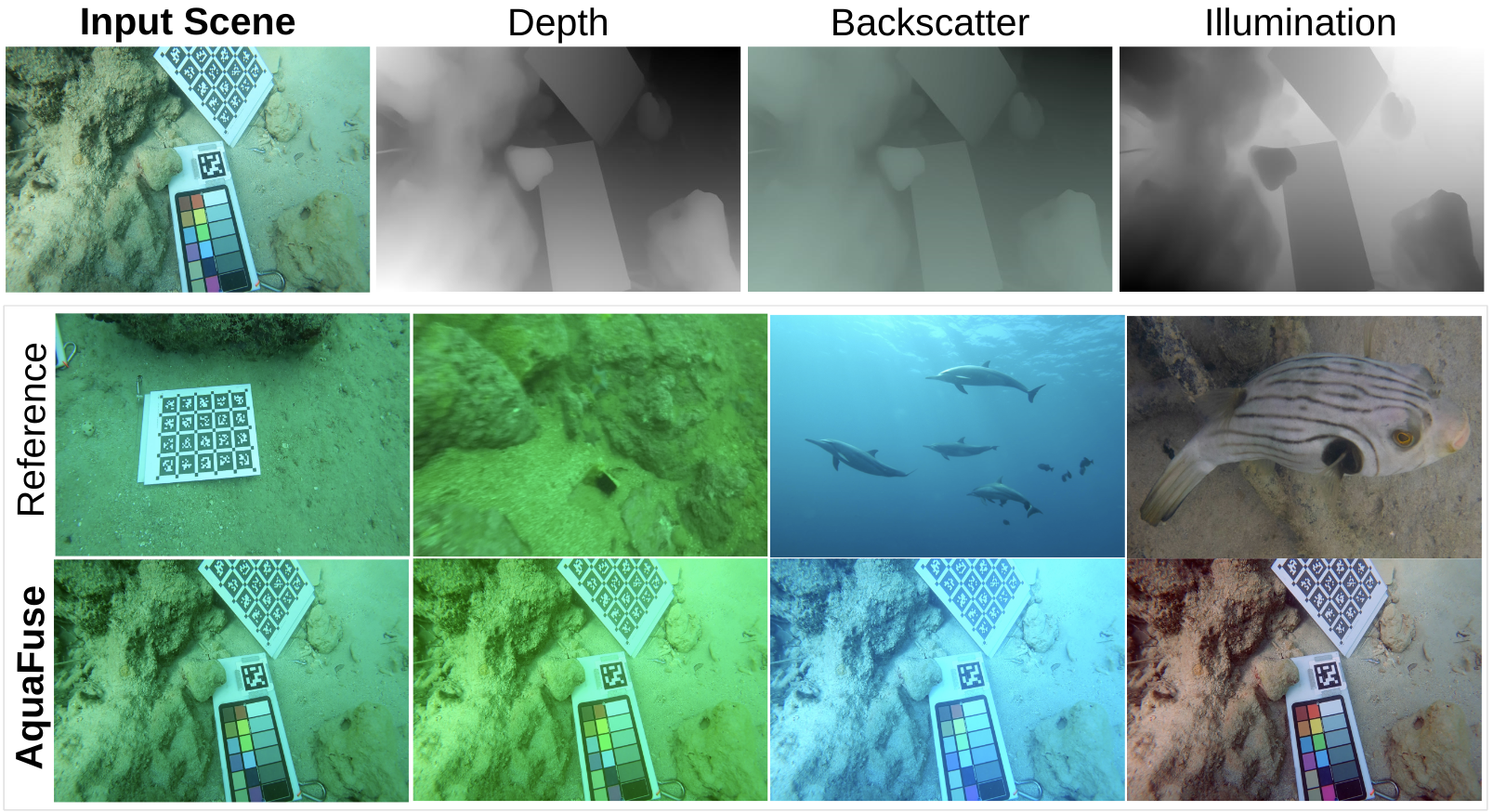

AquaFuse Project

We introduce AquaFuse, a physically accurate method for synthesizing and transferring waterbody style in underwater imagery. AquaFuse leverages the physical characteristics of light propagation underwater to decouple a scene into object content and waterbody. The decoupled object content is then rendered into other waterbody types to generate different scenes with the same object geometry. This provides a new technique for domain-aware data augmentation by fusing waterbodies across different scenes. Traditional augmentation approaches rely on perspective or isometric transformations (rotations, translations, scaling) or on altering basic photometric properties (brightness, color, contrast). We hypothesize that physically accurate waterbody fusion can be an effective way to augment data for underwater image recognition and scene rendering research. Project page
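
To make the idea of separating object content from waterbody concrete, here is a minimal per-pixel sketch built on a widely used simplified underwater image formation model, I = J·e^(-βd) + B·(1 - e^(-βd)), where J is the unattenuated object color, d the camera-to-object range, β the per-channel attenuation coefficient, and B the veiling (background) light. This model, the `Waterbody` type, the function names, and all parameter values are our assumptions for illustration; AquaFuse's actual formulation may differ (for example, it may use separate coefficients for direct and backscattered light).

```python
import math
from dataclasses import dataclass

# Hypothetical sketch of physics-guided waterbody transfer for one pixel.
# Assumed simplified formation model, applied per color channel c:
#   I_c = J_c * exp(-beta_c * d) + B_c * (1 - exp(-beta_c * d))


@dataclass(frozen=True)
class Waterbody:
    beta: tuple  # per-channel (R, G, B) attenuation coefficients in 1/m
    veil: tuple  # per-channel veiling (background) light B in [0, 1]


def render(J, depth, water):
    """Place object color J at range `depth` (m) inside `water`."""
    out = []
    for j, b, B in zip(J, water.beta, water.veil):
        t = math.exp(-b * depth)  # fraction of object light that survives
        out.append(j * t + B * (1.0 - t))
    return tuple(out)


def recover(I, depth, water):
    """Invert the model to estimate object content J, clamped to [0, 1]."""
    out = []
    for i, b, B in zip(I, water.beta, water.veil):
        t = math.exp(-b * depth)
        out.append(min(max((i - B * (1.0 - t)) / t, 0.0), 1.0))
    return tuple(out)


def transfer(I, depth, src, dst):
    """Decouple content from `src` water, then re-render it in `dst` water."""
    return render(recover(I, depth, src), depth, dst)


if __name__ == "__main__":
    # Made-up parameters: greenish coastal water vs. clearer blue water.
    coastal = Waterbody(beta=(0.60, 0.15, 0.25), veil=(0.05, 0.35, 0.30))
    clear = Waterbody(beta=(0.40, 0.05, 0.08), veil=(0.02, 0.25, 0.45))
    observed = render((0.8, 0.5, 0.3), 3.0, coastal)
    print(transfer(observed, 3.0, coastal, clear))
```

Because the per-pixel range d is held fixed while only β and B change, the same object geometry appears under a different water type, which is the kind of geometry-preserving augmentation described above. In practice d would come from a depth map, and the clamping in `recover` matters: at far range the transmission becomes tiny, so noise makes the inversion unstable.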

Word2Wave Project

This project explores the design and development of a language-based interface for dynamic mission programming of autonomous underwater vehicles (AUVs). The proposed Word2Wave (W2W) framework enables interactive programming and parameter configuration of AUVs for remote subsea missions. Its learning pipeline adapts a small language model (SLM), T5-Small, which learns the language-to-mission mapping effectively from processed language data, providing robust and efficient performance. In addition to a benchmark evaluation against state-of-the-art methods, we conduct a user interaction study to demonstrate the effectiveness of W2W over commercial AUV programming interfaces. W2W opens up promising future research opportunities on hands-free AUV mission programming for subsea deployments. Project page
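
Whatever the language model generates still has to be turned into commands the vehicle can execute and checked before deployment. The sketch below illustrates that last step under our own assumptions: it pretends the model emits a compact, semicolon-separated mission string such as `move_to(lat=29.65, lon=-82.34, depth=5); survey(pattern=lawnmower, spacing=10)`. The command names, parameters, `SCHEMA`, and `parse_mission` are all hypothetical and are not W2W's actual mission format.

```python
import re
from dataclasses import dataclass, field

# Hypothetical command schema: command name -> required parameter names.
SCHEMA = {
    "move_to": {"lat", "lon", "depth"},
    "survey": {"pattern", "spacing"},
    "surface": set(),
}

# One step looks like: name(key=value, key=value)
_STEP = re.compile(r"^\s*([a-z_]+)\s*\((.*)\)\s*$")


@dataclass
class MissionStep:
    command: str
    params: dict = field(default_factory=dict)


def _parse_value(text):
    """Numbers become floats; anything else is kept as a plain string."""
    try:
        return float(text)
    except ValueError:
        return text


def parse_mission(text):
    """Parse 'cmd(k=v, ...); cmd(...)' into schema-validated MissionStep objects."""
    steps = []
    for chunk in text.split(";"):
        if not chunk.strip():
            continue  # tolerate a trailing semicolon
        match = _STEP.match(chunk)
        if match is None:
            raise ValueError(f"malformed step: {chunk.strip()!r}")
        name, arg_text = match.groups()
        if name not in SCHEMA:
            raise ValueError(f"unknown command: {name!r}")
        params = {}
        for pair in (p.strip() for p in arg_text.split(",")):
            if not pair:
                continue  # e.g. surface() has no arguments
            key, sep, value = pair.partition("=")
            if not sep:
                raise ValueError(f"expected key=value, got {pair!r}")
            params[key.strip()] = _parse_value(value.strip())
        missing = SCHEMA[name] - set(params)
        if missing:
            raise ValueError(f"{name} is missing {sorted(missing)}")
        steps.append(MissionStep(name, params))
    return steps


if __name__ == "__main__":
    plan = parse_mission(
        "move_to(lat=29.65, lon=-82.34, depth=5); "
        "survey(pattern=lawnmower, spacing=10); surface()"
    )
    for step in plan:
        print(step)
```

Keeping a strict schema between the model and the vehicle is a cheap safety net: a hallucinated command or a missing depth is rejected on the operator's side instead of reaching the AUV.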

Publications

- A. Abdullah, A. Gupta, V. Ramesh, S. Patel, and M. J. Islam. NemeSys: An Online Underwater Explorer with Goal-Driven Adaptive Autonomy. In Review, 2025.

- J. Wu, T. Wang, M.A.B. Siddique, M. J. Islam, C. Fermuller, Y. Aloimonos, C. A. Metzler. Single-Step Latent Diffusion for Underwater Image Restoration. IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), 2025. Presented at ICCP 2025. [pre-print] [code] *Impact Factor: 20.8

- V. Ramesh, H. Wang, and M. J. Islam. HiRQA: Hierarchical Ranking and Quality Alignment for Opinion-Unaware Image Quality Assessment. In Review, 2025.

- Y. Zhang, A. Abdullah, S. J. Koppal, and M. J. Islam. VL-Explore: Zero-shot Vision-Language Exploration and Target Discovery by Mobile Robots. In Review, 2025.

- R. Chen, D. Blow, A. Abdullah, and M. J. Islam. SubSense: VR-Haptic and Motor Feedback for Immersive Control in Subsea Telerobotics. The OCEANS 2025 Great Lakes conference 250515 (113), US, 2025.

- V. Ramesh, J. Liu, H. Wang, and M. J. Islam. DGIQA: Depth-guided Feature Attention and Refinement for Generalizable Image Quality Assessment. In Review, 2025.

- M.A.B. Siddique, V. Ramesh, J. Liu, P. Singh, and M. J. Islam. UStyle: Waterbody Style Transfer of Underwater Scenes by Depth-guided High-Fidelity Feature Synthesis. In Review, 2025. [code] [project page]

- A. Abdullah, R. Chen, D. Blow, T. Uthai, E. Du, and M. J. Islam. Human-Machine Interfaces for Subsea Telerobotics: from Soda-straw to Natural Language Interactions. In Review, 2025.

- A. Abdullah, R. Chen, I. Rekleitis, and M. J. Islam. Ego-to-Exo: Interfacing Third Person Visuals from Egocentric Views in Real-time for Improved ROV Teleoperation. International Symposium on Robotics Research (ISRR), CA, US, 2024.

- M.A.B. Siddique, J. Wu, I. Rekleitis, and M. J. Islam. AquaFuse: Waterbody Fusion for Physics Guided View Synthesis of Underwater Scenes. IEEE Robotics and Automation Letters (RA-L), vol. 10, no. 5, 2025. [pre-print] [project page] *Impact Factor: 5.2

- M. Talebi, S. Mahmud, A. Khalifa, and M. J. Islam. BlueME: Robust Underwater Robot-to-Robot Communication Using Compact Magnetoelectric Antennas. In Review, 2025.

- R. Chen, D. Blow, A. Abdullah, and M. J. Islam. Word2Wave: Language Driven Mission Programming for Efficient Subsea Deployments of Marine Robots. IEEE International Conference on Robotics and Automation (ICRA), 2025.