In the News!

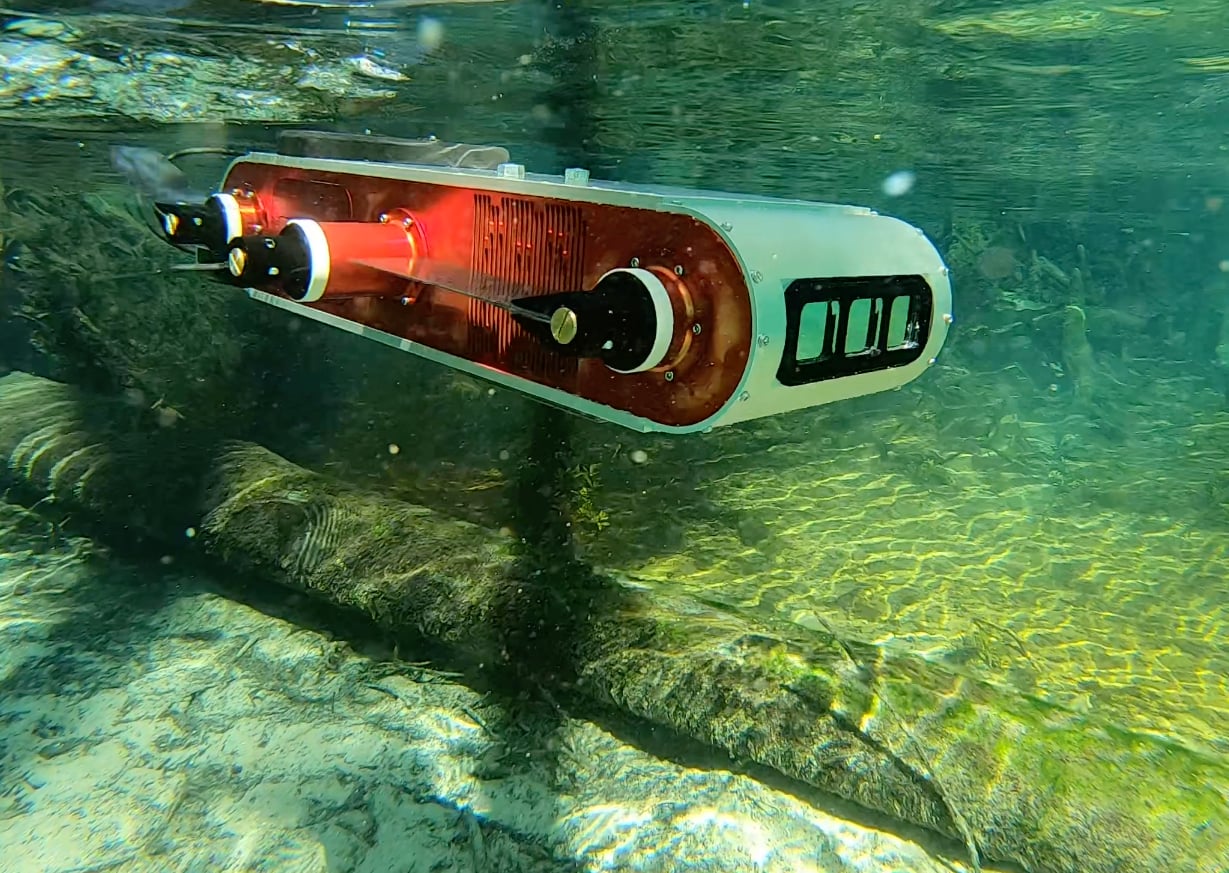

[8/25]: RoboPI Lab - Taking AI into the Deep!

[12/24]: Dr. Islam Named Yangbin Wang Endowed Rising Star Assistant Professor at ECE, UF.

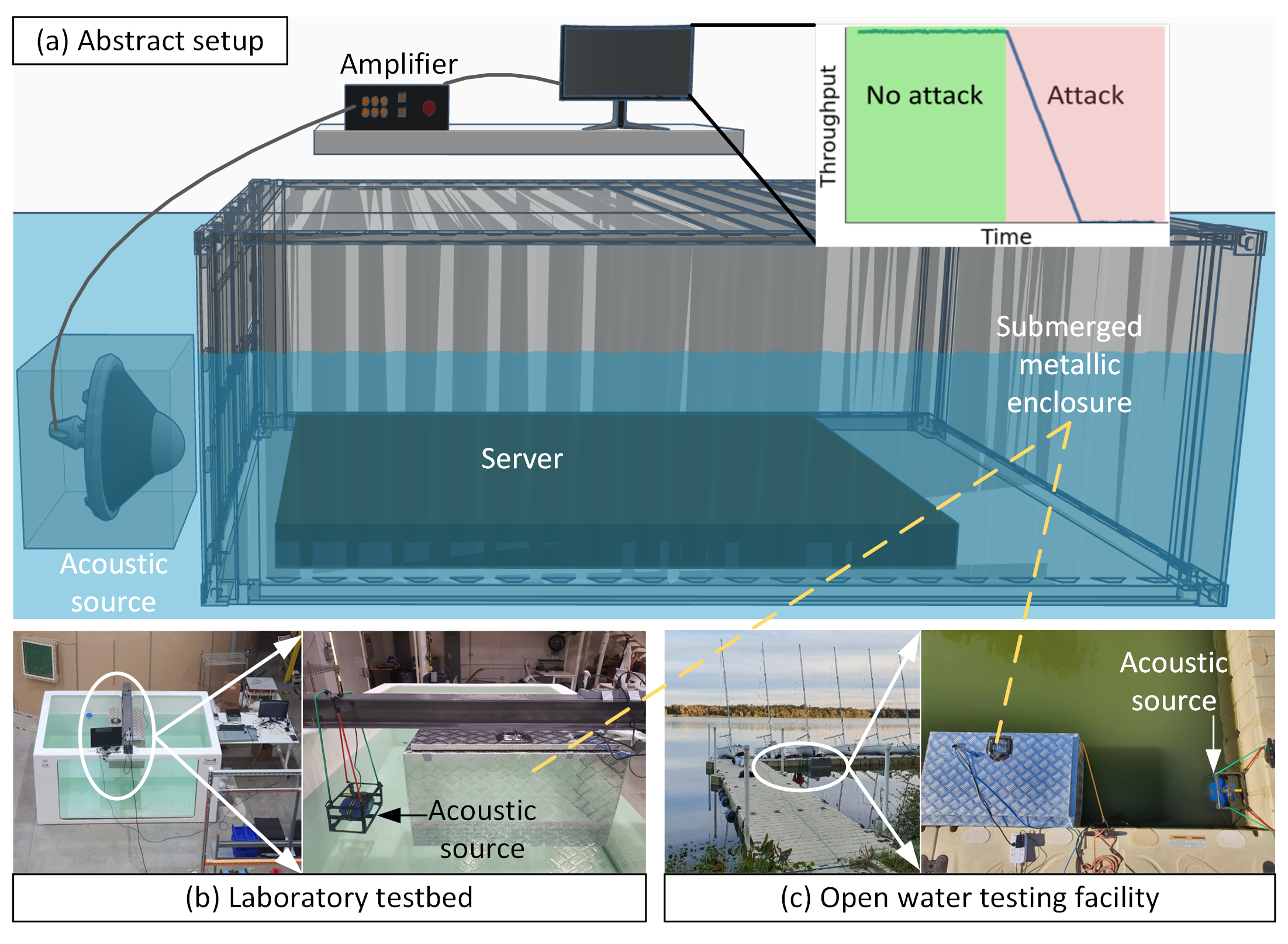

[7/24]: Our research on safeguarding underwater data centers!

[3/23]: RoboPI Lab, Dept. of ECE, College of Engineering, at UF

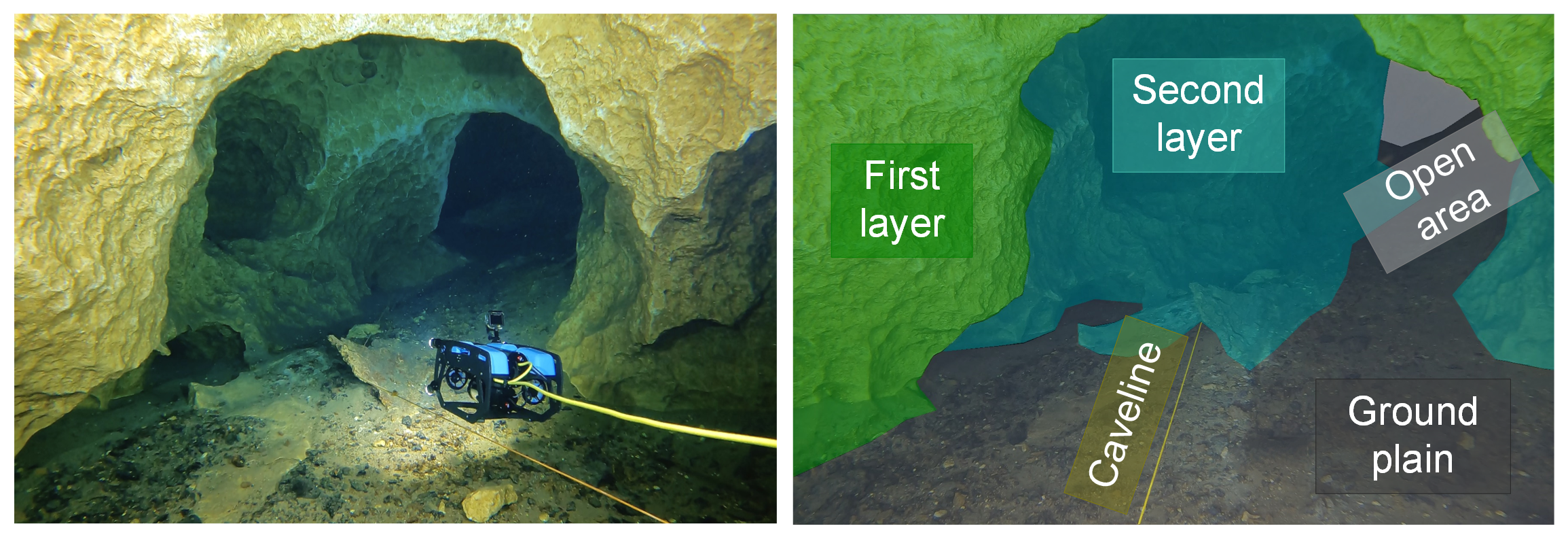

Robots revolutionize cave cartography

Updates

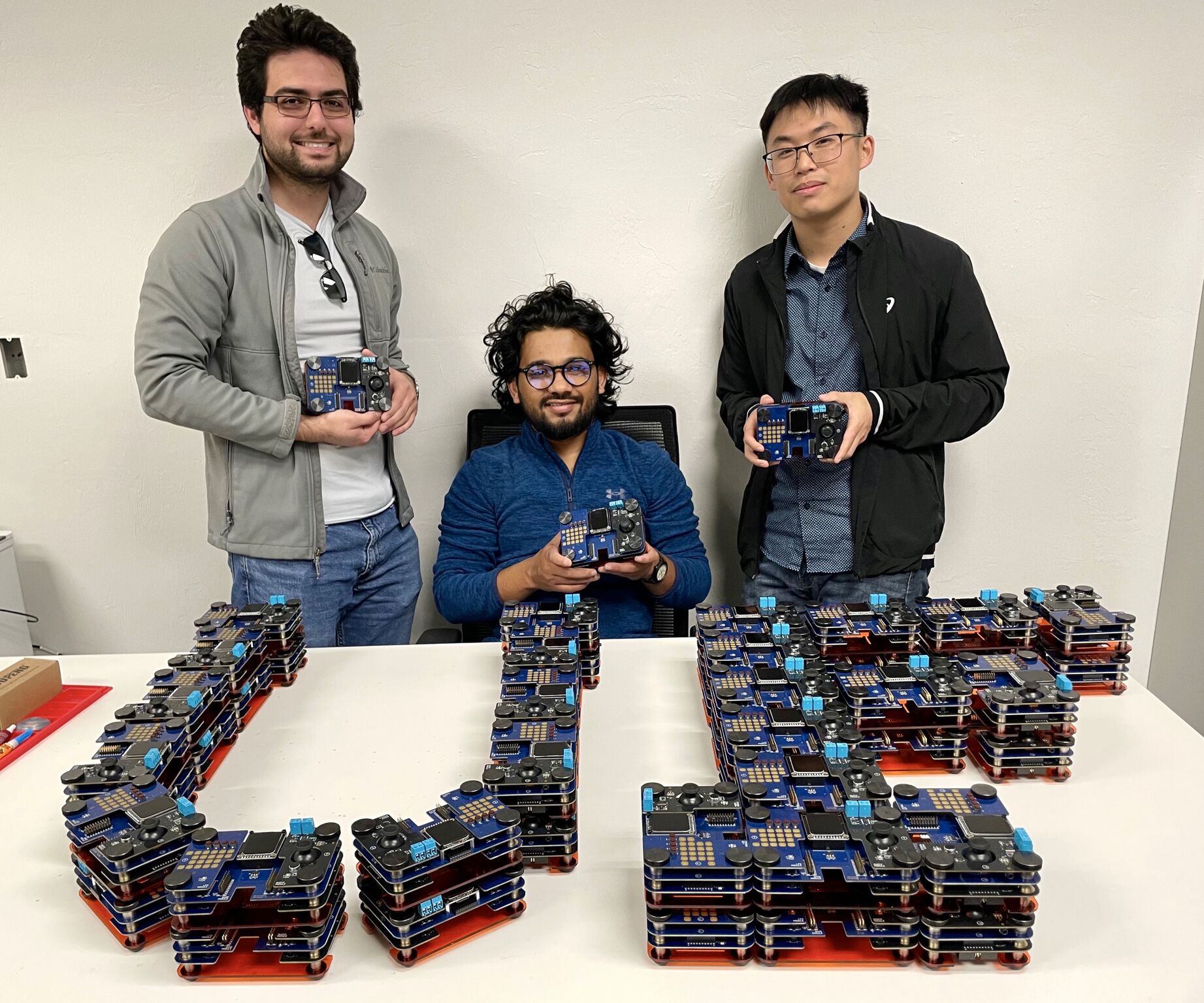

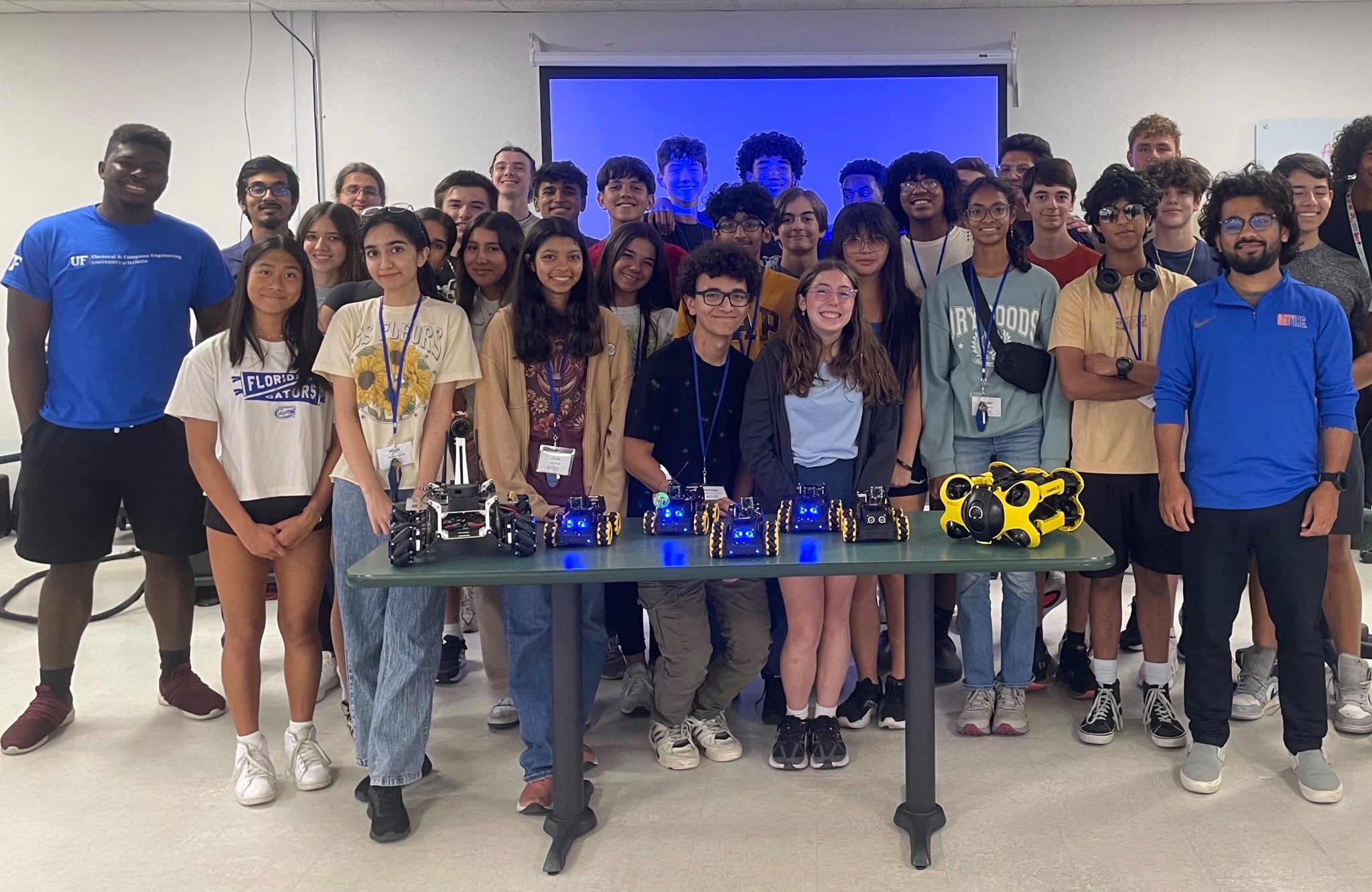

June 2025: A productive K-12 'RoboGator Day' workshop with the RISE program at CPET, UF.

May 2025:

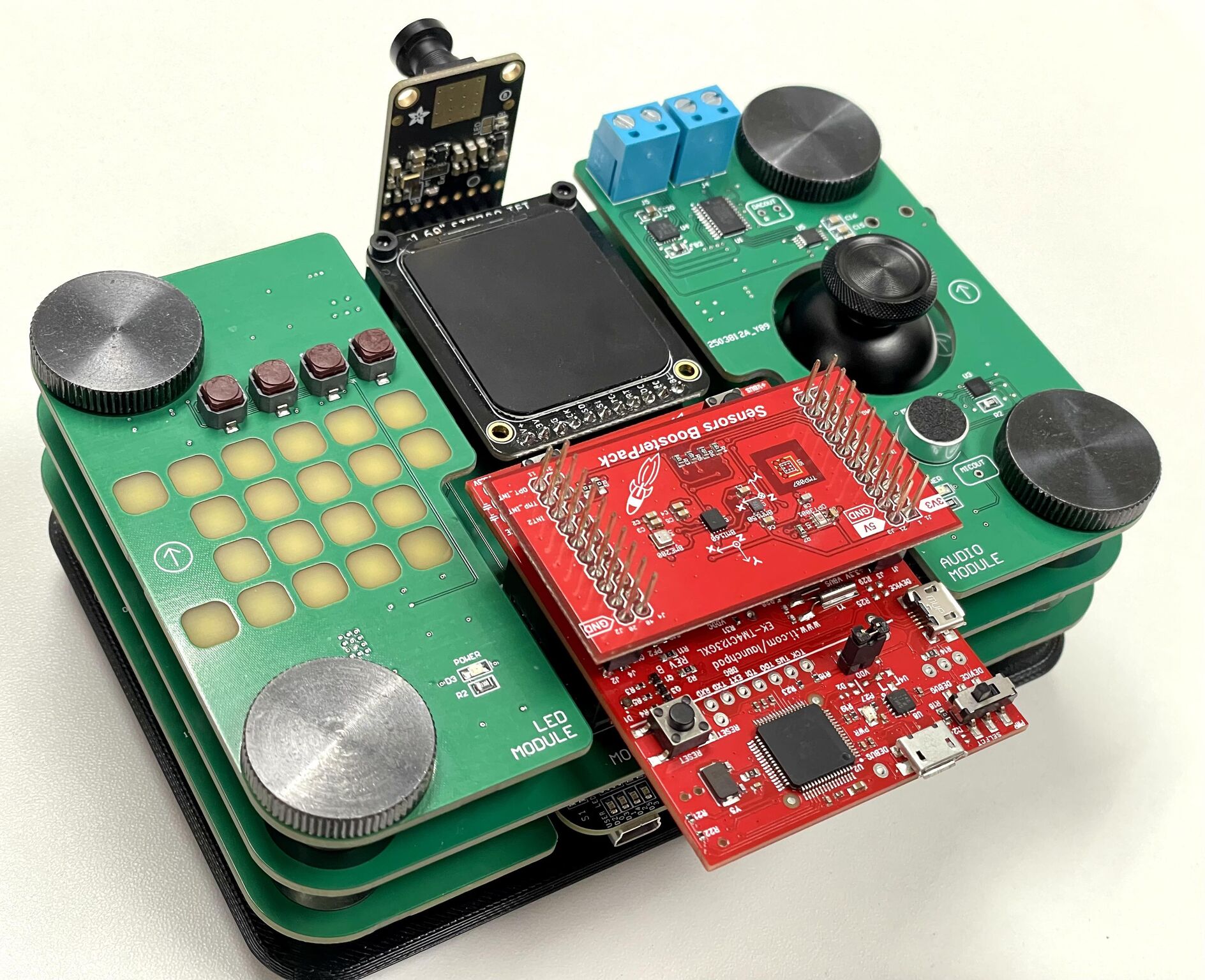

Word2Wave is presented at the ICRA 2025 in Atlanta!

April 2025: Our CavePI paper was accepted for publication at RSS 2025!

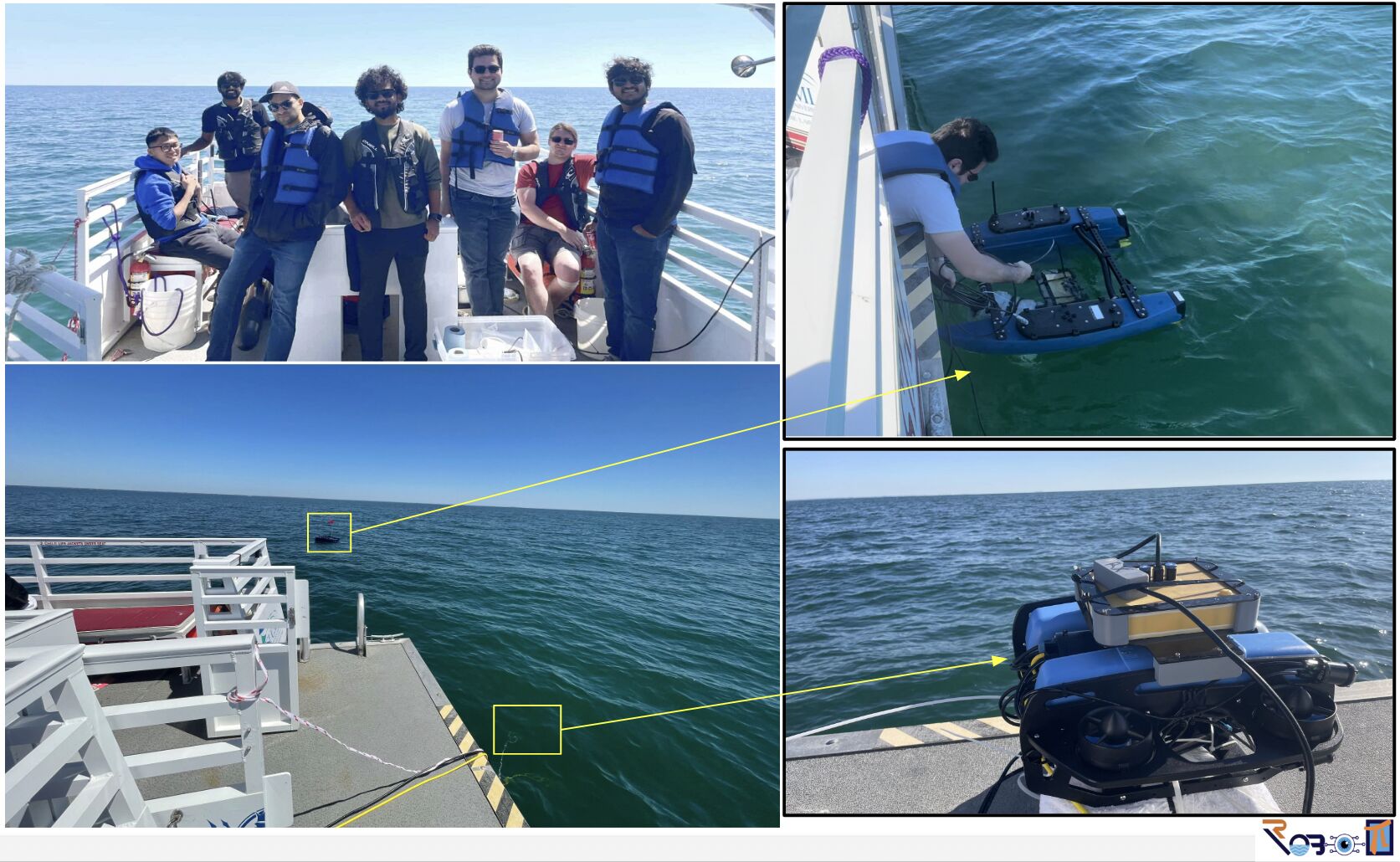

Mar 2025: Groundbreaking results from our BlueME ocean trials! (LinkedIn)

Mar 2025: We conducted robotics trials in Miami for a subsea mapping project! (LinkedIn)

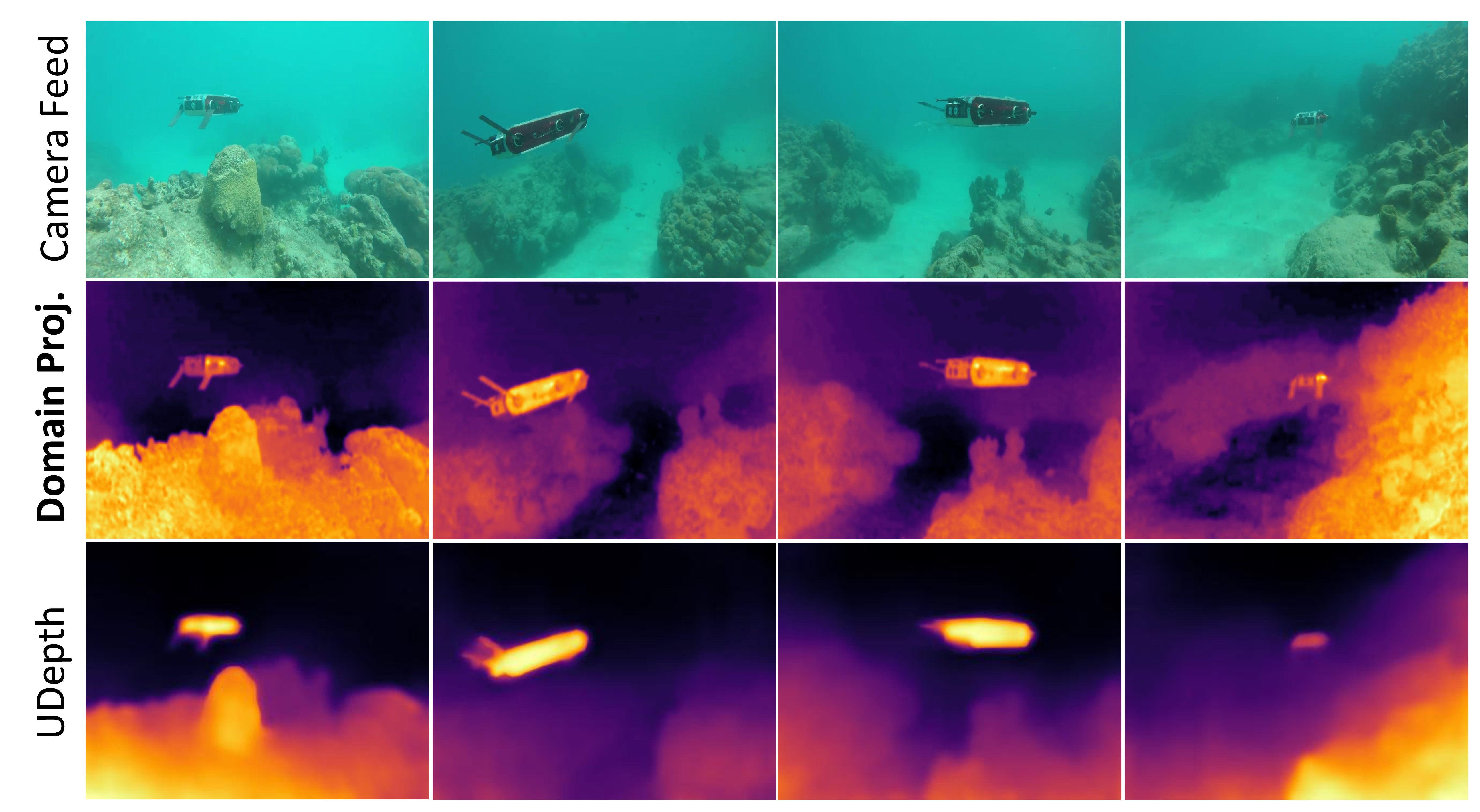

Mar 2025: Our AquaFuse paper was accepted for publication in IEEE RA-L. [IF: 5.2]

Mar 2025: Dr. Islam held a seminar at Woods Hole Oceanographic Institution (WHOI).

Mar 2025: Our LightViz work was accepted for publication in Springer Nature EMA. [IF: 3.1]

Feb 2025: Dr. Islam held a seminar at the Maryland Robotics Center (MRC), UMD.